Tech Shadow Work launches in 2026 as a biweekly newsletter. Each issue uses a recent moment in technology or tech policy to examine its shadow, asking what these decisions reveal about societal power dynamics. Subscribe to receive updates by email.

Hi, if you’re receiving this email, it’s because you subscribed to my website at some point over the years. While I’ve published writing there, I haven’t sent this long-promised newsletter. In 2026, that changes.

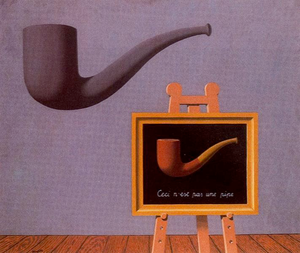

Tech Shadow Work is a concept I have been thinking about ever since I saw this meme. Inspired by Carl Jung's idea of shadow work, I am launching this newsletter to create a space to explore the darker, often unspoken societal beliefs and forces steering technology and tech policy development. Technology, in and of itself, rarely creates wholly new problems. More often, it formalizes, accelerates, or disguises harms that someone with power benefits from and seeks to maintain.

Like many influencers who interpret Jung, I’m keeping my own reading of the “shadow” loose, so that I am free to directly call out the darkness in all its forms, whether it takes the shape of political economy critiques, intractable political problems, or the various psychological tolls of our online times. The goals of this project are twofold: (1) to integrate hard-hitting politics back into our “bipartisan,” watered-down tech policy conversations, which are often relegated to generalized, sanitized, abstract discussions about data parameters, controls, and processes; and (2) to make me (and hopefully you) feel better, by providing a reprieve from the overly technical legal and policymaking discourse and the catharsis of naming the elephant in the room.

To give you a sense of what I might cover in Tech Shadow Work, here are a few stories from the last week that I noticed carried a dark shadow that was not being explicitly called out:

When REAL ID Isn't Real

The Department of Homeland Security stated in a December 11 court filing that "REAL ID can be unreliable to confirm U.S. citizenship." This follows other recent instances in which ICE has overridden previously government-recognized identification and immigration status with its own technical tools and interpretations. In October, ICE officials told members of Congress that "an apparent biometric match by Mobile Fortify is a ‘definitive’ determination of a person’s status and that an ICE officer may ignore evidence of American citizenship—including a birth certificate." And in November, ICE officers reportedly rejected tribal IDs, despite their recognition by federal agencies, calling them fake before detaining three Indigenous family members.

Identity verification is increasingly discussed as a technical problem of better matching parameters, leaving unspoken that identification is an apparatus of sorting and exclusion, designed by those in power to execute their political ideas of deservedness. These recent accounts of ICE picking and choosing which identification serves its detention goals offer an extreme reminder that the real debate over identity is about who gets to decide who deserves what.

Mourning the MetroCard

On December 31, 2025, New York City’s subway system stopped selling its iconic magnetic-strip MetroCard. The sunsetting of the MetroCard follows a familiar “modernization” script. We are told the change is about convenience and efficiency, and are not asked how we feel about the expanded tracking, privatization, or cost structure of public goods.

Technocrats may present less card printing, fewer wasted “unlimited” rides, and fintech integration, alongside fare hikes kept below inflation, as small wins the patron should be excited about. Obscured by the “logical upgrade” rhetoric are the costs of change management, the risks of data tracking exposure, the loss of being able to swipe in another rider as an act of camaraderie, and the public funds now diverted to tolling operations and enforcement instead of improving the accessibility, timeliness, and resilience of the transit system.

In Holding Out for Something Better, a piece on public-benefit software modernization, I wrote about a common fallacy in civic technology communities: upgrades are treated as apolitical, and small UX improvements are trotted out as harm-reducing wins even though they enable systems that do not serve the people.

The New York Transit Museum recently launched a new exhibit honoring the MetroCard as part of the “evolution” of New York City transit tolling. I would like the museum to one day describe that evolution as having moved from tokens, to the MetroCard, to OMNY, to something truly evolutionary: fast, free transit paid for by taxing the rich. On our current trajectory, the next tolling upgrade we will be offered is face scans mimicking license plate scanners. If that day comes, I hope you’ll say with me that we are holding out for something better.

The Bleakness of Letting GAI Name Your Baby

There are a lot of very serious and troubling stories related to generative AI nowadays, and this one is too. Carroll County’s firstborn baby in 2026 was named with the help of ChatGPT. The parents said, "We were looking up on ChatGPT boy names that go well with the last name Winkler. And then once we found Hudson Winkler, we’re like, 'give us a good middle name.'"

This story has layers of bleakness. Mechanically, the most frequently searched dataset on Data.gov for years was Baby Names from Social Security Card Applications precisely because choosing a baby name is the quintessential long-list research problem. You look through options and weigh them against your own secret sauce of associations and connotations: Did your grandparents have that name? Does it carry baggage from your own life? Do you like its meaning, its sound, the way it feels to say out loud?

Naming a child is a decision that carries emotional and symbolic weight. To name your child is to give them a word that will follow them through the world, shaping how others address them and how they understand themselves. Delegating that choice to a generative model signals a quiet surrender of one of the most tender, meaningful decisions humans make.

In future issues, I’ll usually pick one topic like those above and explore it more deeply. I plan to post here roughly every two weeks. Please send me any tips you think are worthy of a "shadow" examination, and thank you for following along.